Video translation software works in four steps: it recognizes text from the speech in the video, translates that text into the target language, dubs the translated text, and finally embeds the dubbing and subtitles into the video.

The first step, recognizing text from the speech in the video, matters most: its accuracy directly affects the quality of the translation and dubbing that follow.
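The flow described above can be sketched as a small pipeline. This is only an illustration, not the software's actual code: `Segment`, `translate_video`, and the four stage callables (`recognize`, `translate`, `dub`, `embed`) are hypothetical names introduced here to show how each stage depends on the recognized text.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Segment:
    """One recognized utterance with its timing (hypothetical structure)."""
    start: float  # seconds
    end: float    # seconds
    text: str


def translate_video(
    video_path: str,
    recognize: Callable[[str], List[Segment]],
    translate: Callable[[str], str],
    dub: Callable[[List[Segment]], str],
    embed: Callable[[str, str, List[Segment]], str],
) -> str:
    """Run the four stages in order and return the output video path."""
    # 1. Speech recognition: everything downstream depends on this text.
    segments = recognize(video_path)
    # 2. Translation keeps the original timing and replaces only the text.
    translated = [Segment(s.start, s.end, translate(s.text)) for s in segments]
    # 3. Dubbing produces an audio track from the translated segments.
    audio_path = dub(translated)
    # 4. Embedding merges the dubbing and the text back into the video.
    return embed(video_path, audio_path, translated)
```

Because the translation and dubbing stages only ever see the recognized text, a recognition error is carried through to the final video unchanged.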

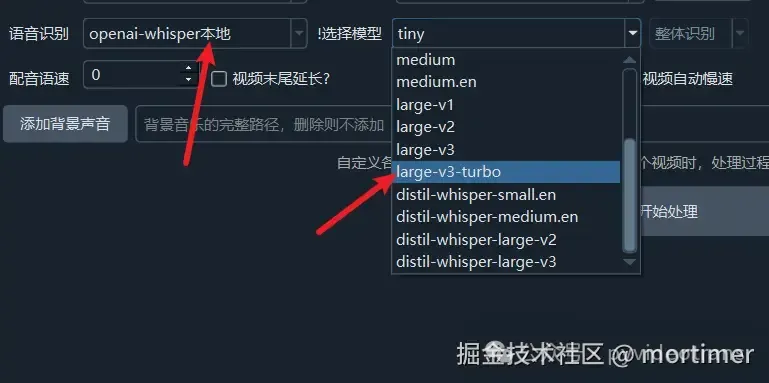

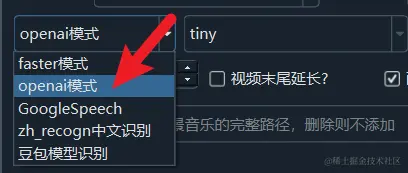

openai-whisper Local Mode

This mode uses the official open-source Whisper model from OpenAI. It is slower than the faster mode but offers the same accuracy.

Models are selected the same way as in the faster mode, using the list on the right. From tiny to large-v3, computer resource consumption increases and accuracy improves accordingly.
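As a rough guide to this trade-off, the sketch below picks the largest model that fits a given amount of GPU memory. The memory figures are the approximate values listed in the upstream openai/whisper README and are an assumption here; actual usage in this software may differ. The function name `largest_model_that_fits` is hypothetical.

```python
from typing import Optional

# Approximate VRAM needs in GB, ordered from smallest to largest model.
# Figures follow the upstream openai/whisper README (assumption: they are
# only indicative for this software).
APPROX_VRAM_GB = [
    ("tiny", 1),
    ("base", 1),
    ("small", 2),
    ("medium", 5),
    ("large-v3", 10),
]


def largest_model_that_fits(vram_gb: float) -> Optional[str]:
    """Return the largest model whose approximate need is within vram_gb."""
    best = None
    for name, need_gb in APPROX_VRAM_GB:
        if need_gb <= vram_gb:
            best = name  # later entries are larger and more accurate
    return best


print(largest_model_that_fits(6))  # medium
```

With less memory than the smallest model needs, the function returns `None`, which is a signal to fall back to CPU or a different recognition mode.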

Note: Although the model names are mostly the same between the faster mode and the openai mode, the models are not interchangeable. Please go to https://github.com/jianchang512/stt/releases/0.0 to download the models for the openai mode.

large-v3-turbo Model

OpenAI recently released a Whisper model called large-v3-turbo, an optimized version of large-v3. Its recognition accuracy is similar to that of large-v3, while its size and resource consumption are significantly lower, so it can be used as a replacement for large-v3.

How to Use

Upgrade the software to version v2.67.

After selecting speech recognition, choose "openai-whisper local" from the dropdown menu.

In the model dropdown menu, select "large-v3-turbo".

Download the `large-v3-turbo.pt` file to the `models` folder within the software directory.
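To confirm the download step worked, the following sketch checks that the model file is in place and, if so, loads it with the open-source `openai-whisper` Python package. It assumes the package is installed separately and that the software directory is passed in by the caller; `find_model` and `load_turbo` are hypothetical helper names, and this is not necessarily how the software itself loads the model.

```python
from pathlib import Path
from typing import Optional

MODEL_FILE = "large-v3-turbo.pt"


def find_model(software_dir: str, filename: str = MODEL_FILE) -> Optional[Path]:
    """Return the path to the model inside <software_dir>/models, or None."""
    path = Path(software_dir) / "models" / filename
    return path if path.is_file() else None


def load_turbo(software_dir: str):
    """Load the downloaded checkpoint with openai-whisper (if installed)."""
    path = find_model(software_dir)
    if path is None:
        raise FileNotFoundError(
            f"{MODEL_FILE} not found in {Path(software_dir) / 'models'}"
        )
    import whisper  # imported lazily so the file check works without it

    # load_model accepts either a model name or a path to a .pt checkpoint.
    return whisper.load_model(str(path))
```

Calling `load_turbo(...)` and then `model.transcribe("audio.wav")` on the result returns a dictionary whose `"text"` entry holds the recognized text; the audio file name here is a placeholder.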